Prepzee's Cloud Masters program changed my career from SysAdmin to Cloud Expert in just 6 months. Thanks to dedicated mentors, I now excel in AWS, Terraform, Ansible, and Python.

Great learning experience through the platform. The curriculum is updated and covers all the topics. The trainers are experts in their respective fields and follow more of a practical approach.

Nice experience. I would recommend it to all learners who want to join and learn IT skills. I was able to switch my domain from non-IT to IT at a reputed MNC.

You’re an IT Professional who is looking for a career in Data Engineering, especially dealing with Cloud-based solutions.

You’re looking to switch domains into the future-proof data industry without going deep into statistics and coding; you may start in Data Engineering.

You’re a DBA, with experience in database management and SQL, and can transition into data engineering roles with ease.

You’re a Data Analyst/Scientist who wants to work with data at a larger scale and manage data pipelines; you may transition into data engineering.

Includes the top 3 Data Engineering tools according to LinkedIn Jobs

Learn by doing multiple labs in your learning journey.

Get a feel for the work of Data Engineering professionals by doing real-time projects.

Call or email us whenever you get stuck.

Instructors are Microsoft Certified Trainers.

Attend multiple batches until you achieve your Dream Goal.

Introduction to Python for Apache Spark

Deep Dive into Apache Spark Framework

Mastering Spark RDDs

Dataframes and SparkSQL

Apache Spark Streaming Data Sources

Core Concepts of Kafka

Kafka Architecture

Where is Kafka Used

Understanding the Components of Kafka Cluster

Configuring Kafka Cluster

Airflow Introduction

Different Components of Airflow

Installing Airflow

Understanding Airflow Web UI

DAG Operators & Tasks in Airflow Job

Create & Schedule Airflow Jobs For Data Processing

Create plugins to add functionalities to Apache Airflow

Introduction to the Snowflake Data Warehousing Service

Snowflake Architecture

Complete Setup of Snowflake

Create Data Warehouse on Snowflake

Analytical Queries on Snowflake Data Warehouse

Understand the entire Snowflake workflow from end to end

Understanding Snowpark (Execute PySpark Application on Snowflake)

Lab 1 : Explore Compute & Storage options for Data Engineering Workloads

Lab 2 : Load and Save Data through RDD in PySpark

Lab 3 : Configuring Single Node Single Cluster in Kafka

Lab 4 : Run Interactive Queries using Azure Synapse Analytics Serverless SQL Pools

Lab 5 : Data Exploration and Transformation in Azure Databricks

Lab 6 : Explore, Transform, and Load Data into the Data Warehouse using Spark

Lab 7 : Ingest and Load Data into the Data Warehouse

Lab 8 : Transform Data with Azure Data Factory or Azure Synapse Pipeline

Lab 9 : Real Time Stream Processing with Stream Analytics

Lab 10 : Create a Stream Processing Solution with Event Hub and Databricks

The primary role involves designing, building, and maintaining data pipelines and infrastructure to support data-driven decision-making.

Responsible for integrating data from various sources, ensuring data quality, and creating a unified view of data for analysis.

Designing and managing data warehouses for efficient data storage and retrieval, often using technologies like Databricks, Snowflake and Azure.

Specializing in data engineering within cloud platforms like AWS and Azure, leveraging cloud-native data services.

Providing expertise to organizations on data-related issues, helping them make informed decisions and optimize data processes.

Designing the overall data architecture for an organization, including data models, storage, and access patterns.

online classroom pass

Embark on your journey towards a thriving career in data engineering with the best Data Engineering courses. This comprehensive program is meticulously crafted to empower you with the skills and expertise needed to excel in the dynamic world of data engineering. Learn Data Engineering with Prepzee: throughout the program, you’ll explore a wide array of essential tools and technologies, including industry favorites like Databricks, Snowflake, PySpark, Azure, Azure Synapse Analytics, and more. Dive into industry projects, elevate your CV and LinkedIn presence, and attain mastery in Data Engineering technologies under the mentorship of seasoned experts.

1.1 Overview of Python

1.2 Different Applications where Python is Used

1.3 Values, Types, Variables

1.4 Operands and Expressions

1.5 Conditional Statements

1.6 Loops

1.7 Command Line Arguments

1.8 Writing to the Screen

1.9 Python Files I/O Functions

1.10 Numbers

1.11 Strings and Related Operations

1.12 Tuples and Related Operations

1.13 Lists and Related Operations

1.14 Dictionaries and Related Operations

1.15 Sets and Related Operations

Hands-On:

2.1 Functions

2.2 Function Parameters

2.3 Global Variables

2.4 Variable Scope and Returning Values

2.5 Lambda Functions

2.6 Object-Oriented Concepts

2.7 Standard Libraries

2.8 Modules Used in Python

2.9 The Import Statements

2.10 Module Search Path

2.11 Package Installation Ways

Hands-On:

3.1 Spark Components & its Architecture

3.2 Spark Deployment Modes

3.3 Introduction to PySpark Shell

3.4 Submitting PySpark Job

3.5 Spark Web UI

3.6 Writing your first PySpark Job Using Jupyter Notebook

3.7 Data Ingestion using Sqoop

Hands-On:

4.1 Challenges in Existing Computing Methods

4.2 Probable Solution & How RDD Solves the Problem

4.3 What is RDD, Its Operations, Transformations & Actions

4.4 Data Loading and Saving Through RDDs

4.5 Key-Value Pair RDDs

4.6 Other Pair RDDs, Two Pair RDDs

4.7 RDD Lineage

4.8 RDD Persistence

4.9 WordCount Program Using RDD Concepts

4.10 RDD Partitioning & How it Helps Achieve Parallelization

4.11 Passing Functions to Spark

Hands-On:

5.1 Need for Spark SQL

5.2 What is Spark SQL

5.3 Spark SQL Architecture

5.4 SQL Context in Spark SQL

5.5 Schema RDDs

5.6 User-Defined Functions

5.7 Data Frames & Datasets

5.8 Interoperating with RDDs

6.1 Need for Kafka

6.2 What is Kafka

6.3 Core Concepts of Kafka

6.4 Kafka Architecture

6.5 Where is Kafka Used

6.6 Understanding the Components of Kafka Cluster

6.7 Configuring Kafka Cluster

Hands-On:

7.1 Drawbacks in Existing Computing Methods

7.2 Why Streaming is Necessary

7.3 What is Spark Streaming

7.4 Spark Streaming Features

7.5 Spark Streaming Workflow

7.6 How Uber Uses Streaming Data

7.7 Streaming Context & DStreams

7.8 Transformations on DStreams

7.9 Describe Windowed Operators and Why They are Useful

7.10 Important Windowed Operators

7.11 Slice, Window and ReduceByWindow Operators

7.12 Stateful Operators

Hands-On:

8.1 Apache Spark Streaming Data Sources

8.2 Streaming Data Source Overview

8.3 Example Using a Kafka Direct Data Source

Hands-On:

9.1 OLAP vs OLTP

9.2 What is a Data Warehouse?

9.3 Difference between Data Warehouse, Data Lake and Data Mart

9.4 Fact Tables

9.5 Dimension Tables

9.6 Slowly Changing Dimensions

9.7 Types of SCDs

9.8 Star Schema Design

9.9 Snowflake Schema Design

9.10 Data Warehousing Case Studies

10.1 Introduction to Cloud Computing

10.2 Types of Cloud Models

10.3 Types of Cloud Service Models

10.4 IaaS

10.5 SaaS

10.6 PaaS

10.7 Creation of Microsoft Azure Account

10.8 Microsoft Azure Portal Overview

11.1 Introduction to Azure Synapse Analytics

11.2 Work with data streams by using Azure Stream Analytics

11.3 Design a multidimensional schema to optimize analytical workloads

11.4 Code-free transformation at scale with Azure Data Factory

11.5 Populate slowly changing dimensions in Azure Synapse Analytics pipelines

11.6 Design a Modern Data Warehouse using Azure Synapse Analytics

11.7 Secure a data warehouse in Azure Synapse Analytics

12.1 Explore Azure Synapse serverless SQL pool capabilities

12.2 Query data in the lake using Azure Synapse serverless SQL pools

12.3 Create metadata objects in Azure Synapse serverless SQL pools

12.4 Secure data and manage users in Azure Synapse serverless SQL pools

13.1 Understand big data engineering with Apache Spark in Azure Synapse Analytics

13.2 Ingest data with Apache Spark notebooks in Azure Synapse Analytics

13.3 Transform data with DataFrames in Apache Spark Pools in Azure Synapse Analytics

13.4 Integrate SQL and Apache Spark pools in Azure Synapse Analytics

14.1 Describe Azure Databricks

14.2 Read and write data in Azure Databricks

14.3 Work with DataFrames in Azure Databricks

14.4 Work with DataFrames advanced methods in Azure Databricks

15.1 Use data loading best practices in Azure Synapse Analytics

15.2 Petabyte-scale ingestion with Azure Data Factory or Azure Synapse Pipelines

16.1 Data integration with Azure Data Factory or Azure Synapse Pipelines

16.2 Code-free transformation at scale with Azure Data Factory or Azure Synapse Pipelines

16.3 Orchestrate data movement and transformation in Azure Data Factory or Azure Synapse Pipelines

17.1 Optimize data warehouse query performance in Azure Synapse Analytics

17.2 Understand data warehouse developer features of Azure Synapse Analytics

17.3 Analyze and optimize data warehouse storage in Azure Synapse Analytics

18.2 Configure Azure Synapse Link with Azure Cosmos DB

18.3 Query Azure Cosmos DB with Apache Spark for Azure Synapse Analytics

18.4 Query Azure Cosmos DB with SQL serverless for Azure Synapse Analytics

19.1 Secure a data warehouse in Azure Synapse Analytics

19.2 Configure and manage secrets in Azure Key Vault

19.3 Implement compliance controls for sensitive data

20.1 Enable reliable messaging for Big Data applications using Azure Event Hubs

20.2 Work with data streams by using Azure Stream Analytics

20.3 Ingest data streams with Azure Stream Analytics

21.1 Process streaming data with Azure Databricks structured streaming

22.1 Create reports with Power BI using its integration with Azure Synapse Analytics

23.1 Use the integrated machine learning process in Azure Synapse Analytics

24.1 Introduction to Airflow

24.2 Different Components of Airflow

24.3 Installing Airflow

24.4 Understanding Airflow Web UI

24.5 DAG Operators & Tasks in Airflow Job

24.6 Create & Schedule Airflow Jobs For Data Processing

25.1 Snowflake Overview and Architecture

25.2 Connecting to Snowflake

25.3 Data Protection Features

25.4 SQL Support in Snowflake

25.5 Caching in Snowflake

Query Performance

25.6 Data Loading and Unloading

25.7 Functions and Procedures

Using Tasks

25.8 Managing Security

Access Control and User Management

25.9 Semi-Structured Data

25.10 Introduction to Data Sharing

25.11 Virtual Warehouse Scaling

25.12 Account and Resource Management

Our tutors are real business practitioners who have hand-picked and created assignments and projects like those you will encounter in real work.

Ingest data into a data lake and apply PySpark for data integration, transformation, and optimization. Create a system that maintains a structured data repository within a data lake to support analytics.

Create a robust data warehousing solution in Snowflake for a retail company. Ingest and transform sales data from various sources, enabling advanced analytics for inventory management and sales forecasting.

Build a comprehensive ETL (Extract, Transform, Load) pipeline that automates the extraction, transformation, and loading of data into a data warehouse. Implement scheduling, error handling, and monitoring for a robust ETL process.

Use standard DataFrame methods to explore and transform data. Key points: create a lab environment and an Azure Databricks cluster.

The project includes loading data into Synapse dedicated SQL pools with PolyBase and COPY using T-SQL. Use workload management and Copy activity in an Azure Synapse pipeline for petabyte-scale data ingestion.

The project revolves around building data integration pipelines to ingest from multiple data sources, transforming data using mapping data flows and notebooks, and performing data movement into one or more data sinks.
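To give a feel for the ETL pipeline project described above, here is a minimal sketch of the extract, transform, and load steps with basic error handling and run metrics. It is not the course's project code: it assumes an in-memory SQLite database standing in for the data warehouse, and the sample data, the `orders` table, and the function names are all hypothetical. Scheduling is left out; in practice an orchestrator such as Airflow would trigger `run_pipeline`.

```python
import csv
import io
import logging
import sqlite3
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl_sketch")

# Hypothetical sample input; a real pipeline would read from files, APIs, or a data lake.
RAW_CSV = """order_id,amount,country
1,19.99,in
2,not_a_number,us
3,5.00,US
"""


def extract(text):
    """Parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Clean rows; collect bad rows instead of failing the whole run."""
    good, rejected = [], []
    for row in rows:
        try:
            cleaned = (int(row["order_id"]), float(row["amount"]), row["country"].upper())
        except (KeyError, ValueError) as exc:
            log.warning("rejecting row %r: %s", row, exc)
            rejected.append(row)
        else:
            good.append(cleaned)
    return good, rejected


def load(conn, rows):
    """Insert cleaned rows into the (stand-in) warehouse table in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
        )
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)


def run_pipeline(conn, text, retries=3, delay_seconds=0.0):
    """Run extract -> transform -> load, retrying on database errors.

    Returns a small metrics dict that a monitoring system could record.
    """
    for attempt in range(1, retries + 1):
        try:
            rows = extract(text)
            good, rejected = transform(rows)
            load(conn, good)
            metrics = {
                "extracted": len(rows),
                "loaded": len(good),
                "rejected": len(rejected),
                "attempts": attempt,
            }
            log.info("run finished: %s", metrics)
            return metrics
        except sqlite3.Error:
            log.exception("attempt %d failed", attempt)
            time.sleep(delay_seconds)
    raise RuntimeError("pipeline failed after %d attempts" % retries)


conn = sqlite3.connect(":memory:")
metrics = run_pipeline(conn, RAW_CSV)
total = conn.execute("SELECT ROUND(SUM(amount), 2) FROM orders").fetchone()[0]
```

Rejecting a malformed row rather than aborting the run is one possible design choice; a stricter pipeline might fail fast or route rejected rows to a separate table for review.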

Enrolling in the Cloud Master Course by Prepzee was a game-changer for me. The comprehensive curriculum covering AWS, DevOps, Azure, and Python gave me a holistic understanding of cloud technologies. The hands-on labs were invaluable, and I now feel confident navigating these platforms in my career. Prepzee truly delivers excellence in cloud education. The balance between theory and practical application was perfect, allowing me to grasp complex concepts with ease. I'm grateful for the opportunity to have learned from industry experts through this course.

Prepzee's Cloud Master Course exceeded my expectations. The course materials were detailed and insightful, providing a clear roadmap to mastering cloud technologies. The instructors' expertise shone through their teachings, and the interactive elements made learning enjoyable. This course has undoubtedly been a valuable asset to my professional growth.

I wanted a comprehensive cloud education, and the Cloud Master Course at Prepzee delivered exactly that. The course content was rich and well-presented, catering to both beginners and those with prior knowledge. The instructors' passion for the subject was evident, and the hands-on labs helped solidify my understanding. Thank you, Prepzee!

Prepzee has been a great partner for us and is committed to upskilling our employees. Their catalog of training content covers a wide range of digital domains and functions, which we appreciate. The best part was their LMS, on which videos were posted online for you to review if you missed anything during the class. I would recommend Prepzee to all to boost their learning. The trainer was also very knowledgeable and ready to answer individual questions.

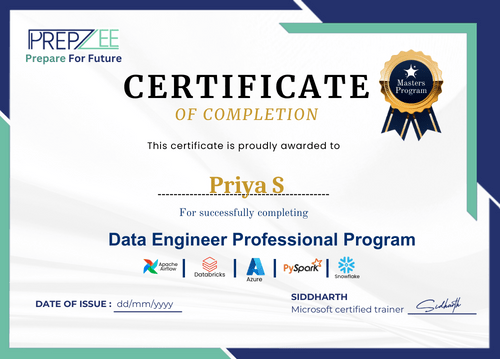

Get Certified after completing this course with Prepzee
